Custom web fonts let you make a website distinctive, emphasize its design and attract users' attention. The price for these benefits is slower loading on sites that use their own (custom) fonts, especially when the fonts are served from a remote host. What can be done about it?

Below we consider the main approaches to optimizing web fonts: self-hosting, the Google Fonts API, size-reduction techniques and deferred font loading.

In brief, the optimization steps are:

- Assemble the set of fonts you need, keeping only the essential ones.

- Host them yourself or use the Google Fonts API.

- Configure caching and compression for font files.

- Set up deferred font loading and cache fonts in localStorage.

A Brief History of Web Typography

It all began in 1995, when Netscape added support for the font tag, which let page text be styled with system fonts. This made about ten different fonts usable across all browsers. In 1997, Internet Explorer added support for downloadable fonts in the EOT format (which no other browser adopted); this marked the beginning of @font-face as we know it today.

For the next ten years, standards developers focused on broader issues, so ordinary webmasters had to reinvent the wheel. The most common techniques for putting custom fonts on a page were image replacement and FLIR (yes, hundreds of images were generated with the glyphs of a designer font to style headings), Cufon (JavaScript plus vector graphics in SVG and VML), and Flash-based sIFR. The last approach worked in most browsers of the time, because Flash support was extremely widespread (up to 98% at its peak).

In 2006, Opera's CTO Håkon Wium Lie launched a campaign against using the EOT format for web fonts, since Microsoft had stopped developing the format in 2002. As a result, most browsers added support for the standard font formats TTF and OTF, and by 2009 @font-face had largely settled into its current form: different font formats served to different browsers.

The final milestones came in 2010. First, WOFF (Web Open Font Format) arrived: it combined the best of TTF and OTF and added built-in compression and extra metadata. Second, the Google Fonts service launched and became the de facto standard for adding custom fonts to a site.

Why are web fonts so slow?

Once the format war was over (WOFF is supported by 94% of browsers), the battle for web font speed began. While the formats were being developed, browsers settled on a way to declare several font files so that each browser downloads only a format it supports. This alone solved a large part of the problem. Compare the not-so-distant days when styles for every browser were written into a single stylesheet rather than served as separate files.

But this did not solve the main performance problem: downloading fonts significantly blocked page rendering, and a site with many different fonts was painfully slow. There are several reasons for this:

- Large font files: a single custom font can weigh as much as all of a site's styles. A font is binary vector data describing a set of glyphs, whereas styles are plain-text CSS. A few custom fonts in several styles are already enough to block page rendering noticeably.

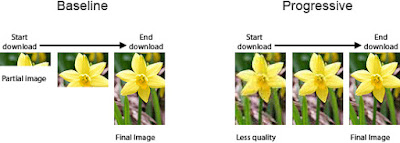

- Blocked rendering: text that is assigned a custom font can be displayed only after the browser has downloaded that font (after all, the font might contain icons or barcodes). Because of this, in most cases the user sees a blank white screen until the required fonts have loaded, and they can be very large.

Using fonts

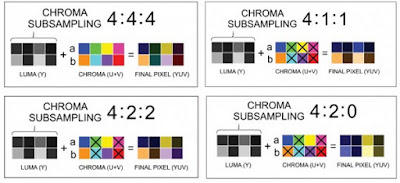

Custom web fonts are most likely not installed on the user's device. You therefore need to specify additional files for the browser to download. These files contain the technical description of the font: the glyphs, the shapes of characters and strokes, and other data. As is often the case, each browser needs its own file. Google Chrome understands WOFF and WOFF2 (the most advanced format), old Android versions understand only TTF, and old versions of Internet Explorer only EOT.

You can also account for the rare case where the font is already installed on the user's device by using local(). The full CSS rule that adds the font to the site looks something like this (several formats are listed so that each browser finds one it supports):

```css
@font-face {
  font-family: 'Awesome Font';
  font-style: normal;
  font-weight: 400;
  src: local('Awesome Font'),
       url('/fonts/awesome.woff2') format('woff2'),
       url('/fonts/awesome.woff') format('woff'),
       url('/fonts/awesome.ttf') format('truetype'),
       url('/fonts/awesome.eot') format('embedded-opentype');
}
```
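If you generate these rules instead of writing them by hand, the format hints are easy to get wrong. Browsers expect format("truetype"), format("opentype") and format("embedded-opentype"), not the file extensions. Below is a minimal TypeScript sketch under our own assumptions. The FontFaceSpec shape and the fontFaceCss name are hypothetical, and font-display defaults to swap, which is discussed later in this article.

```typescript
// Format hints browsers expect in an @font-face src list. TTF, OTF and EOT
// use "truetype", "opentype" and "embedded-opentype", not the extension.
const FORMAT_HINTS = new Map<string, string>([
  ["woff2", "woff2"],
  ["woff", "woff"],
  ["ttf", "truetype"],
  ["otf", "opentype"],
  ["eot", "embedded-opentype"],
  ["svg", "svg"],
]);

// Hypothetical description of one font face (one weight/style combination).
interface FontFaceSpec {
  family: string;
  weight: number;
  style: "normal" | "italic";
  files: string[]; // most efficient format first: woff2, woff, ttf, eot
  display?: "auto" | "block" | "swap" | "fallback" | "optional";
}

// Builds an @font-face rule: local() first, then one url() per file.
function fontFaceCss(spec: FontFaceSpec): string {
  const sources = [`local("${spec.family}")`];
  for (const file of spec.files) {
    const ext = file.slice(file.lastIndexOf(".") + 1).toLowerCase();
    const hint = FORMAT_HINTS.get(ext);
    if (hint === undefined) {
      throw new Error(`Unknown font file extension: ${file}`);
    }
    sources.push(`url("${file}") format("${hint}")`);
  }
  return [
    "@font-face {",
    `  font-family: "${spec.family}";`,
    `  font-style: ${spec.style};`,
    `  font-weight: ${spec.weight};`,
    `  font-display: ${spec.display ?? "swap"};`,
    `  src: ${sources.join(",\n       ")};`,
    "}",
  ].join("\n");
}

console.log(
  fontFaceCss({
    family: "Awesome Font",
    weight: 400,
    style: "normal",
    files: ["/fonts/awesome.woff2", "/fonts/awesome.woff", "/fonts/awesome.ttf"],
  }),
);
```

Rejecting unknown extensions is deliberate: a wrong format hint makes browsers skip that source silently, which is harder to notice than a build error.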

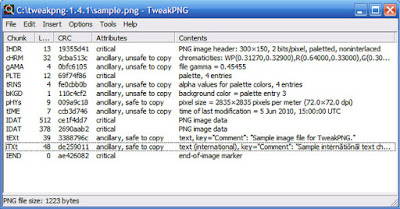

In this case, all font files are self-hosted. To support all browsers, each typeface usually comes as a set of font files. Such a set can be generated, for example, with Font Squirrel: the service automatically produces the CSS code and the files to upload to your server.

Alternatively, you can use the Google Fonts service: you add a reference to the service on your pages. Depending on the visitor's browser, the service returns the appropriate CSS code and font files (already split by language), supporting more than 30 different combinations.

But the main problem with loading custom fonts remains: the fonts are large, sometimes far too large. And they are almost always required to display text on the page, so they download during the "white screen" stage and annoy users as much as possible. How can this be fixed?

Google Fonts API

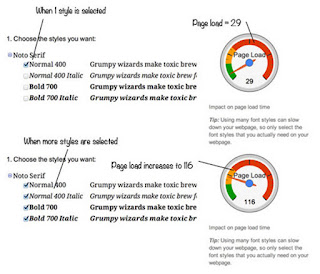

The first and most important optimization step is to keep only the fonts you need. Take an inventory of your fonts: remove the ones the site does not use, and trim the ones it does use down to the characters actually needed. Select not only the fonts but also their variants (regular, italic, bold).

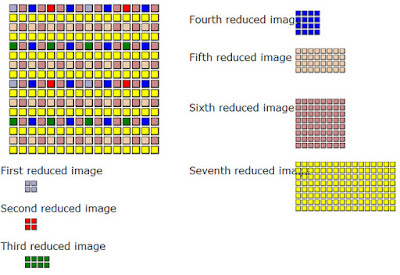

The Google Fonts API lets you download only the font variants you need. It also serves fonts split by character set, so browsers do not have to load the full font when the page needs only some of its glyphs. In addition, it offers the text={letters} parameter, which trims the downloaded font to exactly the specified characters (very valuable if the font is used only for a logo).
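For illustration, here is how the options above map onto a stylesheet URL. This is a minimal sketch that assumes the css2 endpoint and its family=Name:wght@400;700 syntax. googleFontsUrl is a hypothetical helper, not part of any Google library. It also appends display=swap, which asks the service to emit font-display: swap in the returned CSS.

```typescript
// Builds a Google Fonts CSS API v2 URL for an ordinary stylesheet link.
// Requests only the listed weights; if `text` is given, asks the service for
// a font file containing only those characters.
function googleFontsUrl(family: string, weights: number[], text?: string): string {
  // css2 syntax: family=Open+Sans:wght@400;700 (weights ascending, spaces as "+").
  const sorted = Array.from(new Set(weights)).sort((a, b) => a - b);
  const axis = sorted.length > 0 ? `:wght@${sorted.join(";")}` : "";
  let url =
    "https://fonts.googleapis.com/css2?family=" +
    encodeURIComponent(family).replace(/%20/g, "+") +
    axis;
  if (text !== undefined) {
    // Each character needs to be sent only once; Array.from splits by code
    // point, so characters outside the BMP are not broken in half.
    const unique = Array.from(new Set(Array.from(text))).join("");
    url += `&text=${encodeURIComponent(unique)}`;
  }
  return `${url}&display=swap`;
}

console.log(googleFontsUrl("Open Sans", [700, 400], "Awesome"));
```

For a logo, the text value is just the logo's letters, so the returned font file contains only a handful of glyphs.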

Optimizing font size

Optimizing font size comes down to three things: providing backward compatibility, enabling compression and removing unused glyphs. Before optimizing, review the set of custom fonts in use and keep only those the site really needs.

- Backward compatibility. To show your site's text as quickly as possible on any device, tell the browser which fallback font family to use while your own font is unavailable (not loaded yet, or in a format the browser does not support). To do this, list the closest system alternative after the name of your font in the font-family property. Always end with a generic family: serif, sans-serif (no serifs) or monospace (fixed width). Backward compatibility causes FOUT, but that is better than invisible text (FOIT).

- Font compression. With static compression, it is enough to prepare compressed copies of the font files and place them next to the originals. With dynamic compression, check (for example, with an online compression test) that the server compresses the formats that lack built-in compression: EOT, TTF, OTF and SVG. If it does not, add the missing file extensions or MIME types to the compression rules. WOFF and WOFF2 are already compressed internally, so gzipping them gains little. Gzip (or Zopfli) compression reduces font size by 15-50%.

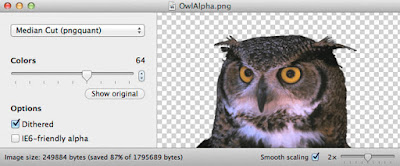

- Glyph removal. A site rarely needs every character a font contains. Some belong to other languages (Chinese, for example), others are special symbols you never use. There are many online tools and Windows/Mac utilities for removing unused glyphs from fonts. The most popular are Font Squirrel (in Advanced mode), Subset.py and FontPrep. Google Fonts also lets you load only the character sets you use. This method reduces the resulting file by another 10-50%.
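Before removing glyphs, you need to know which ones the site actually uses. The following minimal sketch uses a hypothetical helper, unicodeRange. It collects the distinct code points in a sample of the site's text and merges consecutive ones into ranges, in the U+XXXX-YYYY notation of the CSS unicode-range descriptor. Note that unicode-range by itself does not shrink a file: it only tells the browser to skip a font file when none of its characters appear on the page. The actual trimming is done by a subsetting tool such as those listed above, fed this character list in whatever form the tool accepts.

```typescript
// Returns the distinct code points used in `text`, merged into ranges and
// formatted like a CSS unicode-range value, e.g. "U+20, U+41-43".
function unicodeRange(text: string): string {
  // Array.from iterates by code point, so astral characters stay whole.
  const points = Array.from(
    new Set(Array.from(text, (ch) => ch.codePointAt(0) as number)),
  ).sort((a, b) => a - b);
  const hex = (n: number): string => n.toString(16).toUpperCase();
  const parts: string[] = [];
  let start = 0;
  while (start < points.length) {
    let end = start;
    // Extend the range while the next code point is consecutive.
    while (end + 1 < points.length && points[end + 1] === points[end] + 1) {
      end++;
    }
    parts.push(
      start === end
        ? `U+${hex(points[start])}`
        : `U+${hex(points[start])}-${hex(points[end])}`,
    );
    start = end + 1;
  }
  return parts.join(", ");
}

console.log(unicodeRange("CAB A")); // U+20, U+41-43
```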

Together, the three methods significantly speed up site display on all devices without sacrificing the look of the fonts, and they are easy to automate. For example, Airee Cloud applies the second and third methods, reducing the size of hosted fonts by 20-80%.
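For the backward-compatibility item above, a small helper can guarantee that every font-family value ends with a generic family. This is a minimal TypeScript sketch: fontStack is a hypothetical name, and the generic families are limited to those named in this article.

```typescript
// Generic families a font-family value can end with (as listed in this article).
const GENERIC_FAMILIES = new Set(["serif", "sans-serif", "monospace", "cursive", "fantasy"]);

// Builds a font-family value that always ends with exactly one generic family.
function fontStack(families: string[], generic: string): string {
  if (!GENERIC_FAMILIES.has(generic)) {
    throw new Error(`Not a generic family: ${generic}`);
  }
  const named = families
    // Drop generic keywords from the middle of the list; one goes at the end.
    .filter((name) => !GENERIC_FAMILIES.has(name))
    // Quote anything that is not a single simple identifier; quoting is always valid.
    .map((name) => (/^[A-Za-z][A-Za-z-]*$/.test(name) ? name : `"${name}"`));
  return [...named, generic].join(", ");
}

console.log(`font-family: ${fontStack(["Avenir Next Cyr", "Tahoma"], "sans-serif")};`);
// font-family: "Avenir Next Cyr", Tahoma, sans-serif;
```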

Delayed loading of fonts

Several approaches add a little "magic" to web font loading to minimize its negative effects.

The first is aggressive caching of the font files (for all visitors) plus storing base64-encoded copies in localStorage (for mobile visitors). The technique helps only returning visitors, but for them it cuts loading time significantly.
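Below is a minimal TypeScript sketch of the localStorage part. It rests on our own assumptions. First, the fonts have already been converted into a CSS file whose @font-face rules embed them as base64 data: URIs, because localStorage stores only strings. Second, each stored copy is tagged with a version, so that updating the font invalidates it. The storage, the fetch function and the CSS injector are passed in (all names are hypothetical), so the logic can also run outside a browser. On a page you would pass window.localStorage, a fetch-based loader, and a function that appends a style element to the head.

```typescript
// The two Web Storage methods used here; window.localStorage provides them.
interface KeyValueStore {
  getItem(key: string): string | null;
  setItem(key: string, value: string): void;
}

// Applies a font stylesheet (fonts embedded as base64 data: URIs) from the
// store if a copy of the current version is there; otherwise downloads it,
// applies it and saves it for the next visit.
async function loadFontCss(
  url: string,
  version: string,
  store: KeyValueStore,
  fetchText: (url: string) => Promise<string>,
  injectCss: (css: string) => void,
): Promise<"cache" | "network"> {
  const key = `font-css:${url}`;
  const cached = store.getItem(key);
  if (cached !== null) {
    // Stored as "<version>\n<css>"; an entry with another version is ignored.
    const newline = cached.indexOf("\n");
    if (newline !== -1 && cached.slice(0, newline) === version) {
      injectCss(cached.slice(newline + 1));
      return "cache";
    }
  }
  const css = await fetchText(url);
  injectCss(css);
  try {
    store.setItem(key, `${version}\n${css}`);
  } catch {
    // Quota exceeded or storage disabled: the font still works for this
    // visit, it just is not cached.
  }
  return "network";
}
```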

The second is the CSS Font Loading API (not supported by all browsers). On the initial page load, you display text in a fallback font and load the required font asynchronously. Once the font is ready, the browser spends a few tens of milliseconds redrawing the page, an almost invisible "hiccup". Libraries exist to automate this process.
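Here is a minimal sketch of this approach, assuming the browser supports the CSS Font Loading API. Its document.fonts.load() resolves with the matching FontFace objects once the font is usable. The wrapper below, loadFontWithTimeout (a hypothetical name), gives up after a timeout so the page simply stays on the fallback font. It takes the font set as a parameter, typed with only the method it uses, so the logic can be exercised with a stub.

```typescript
// The one method of the CSS Font Loading API's FontFaceSet used here;
// in a browser, document.fonts provides it.
interface FontLoader {
  load(font: string, text?: string): Promise<unknown[]>;
}

// Resolves to true if `font` (CSS font shorthand, e.g. '1em "Awesome Font"')
// becomes usable within `timeoutMs`, otherwise false. Until then the text is
// rendered in the fallback font, so there is no invisible-text period.
function loadFontWithTimeout(fonts: FontLoader, font: string, timeoutMs: number): Promise<boolean> {
  let timer: ReturnType<typeof setTimeout> | undefined;
  const timeout = new Promise<boolean>((resolve) => {
    timer = setTimeout(() => resolve(false), timeoutMs);
  });
  const loaded = fonts.load(font).then(
    (faces) => faces.length > 0, // an empty list means no @font-face rule matched
    () => false, // network or decoding error
  );
  return Promise.race([loaded, timeout]).then((ok) => {
    clearTimeout(timer);
    return ok;
  });
}
```

On a page you might call loadFontWithTimeout(document.fonts, '1em "Awesome Font"', 3000). When it resolves to true, you would add a class such as fonts-loaded (our own choice of name) to the html element, and the CSS would apply the web font only under that class. The same pattern keeps icon fonts hidden until they are ready.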

The third is prefetching fonts that are not needed on the first page a user sees (for example, fonts used only in the user's account area). The prefetch resource hint, already well supported by browsers, is suitable for this (http://caniuse.com/#feat=link-rel-prefetch).
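For illustration, here is a hypothetical helper, fontHint, that produces the link tag for a font file. It emits either a prefetch hint for fonts needed on later pages or a preload hint for fonts needed right away. It assumes the file extension identifies the format, and it adds as="font" and crossorigin. Fonts are always requested in CORS mode, and a preloaded font without crossorigin is not reused by the browser. The example path is made up.

```typescript
// MIME types for the font formats most often preloaded or prefetched.
const FONT_MIME = new Map<string, string>([
  ["woff2", "font/woff2"],
  ["woff", "font/woff"],
  ["ttf", "font/ttf"],
  ["otf", "font/otf"],
]);

// Returns a resource-hint tag for a font file. "prefetch" suits fonts needed
// only on later pages; "preload" suits fonts the current page needs at once.
function fontHint(rel: "prefetch" | "preload", href: string): string {
  const ext = href.slice(href.lastIndexOf(".") + 1).toLowerCase();
  const type = FONT_MIME.get(ext);
  if (type === undefined) {
    throw new Error(`Unsupported font file: ${href}`);
  }
  // crossorigin: fonts are requested in CORS mode, and a preloaded font is
  // reused only if the hint was made in the same mode.
  return `<link rel="${rel}" as="font" href="${href}" type="${type}" crossorigin>`;
}

console.log(fontHint("prefetch", "/fonts/account-icons.woff2"));
```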

For a deeper dive, we recommend Google's article on web font optimization.

Speed up the loading of fonts

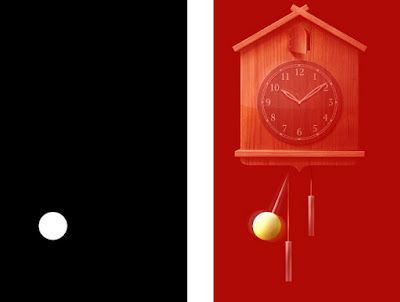

Web developers use several abbreviations to describe what happens while fonts load on a site:

- FOIT (Flash of Invisible Text): text is invisible because the browser does not yet have the font needed to render it. In some cases this shows up as squares in place of icons.
- FOUT (Flash of Unstyled Text): text is drawn in the wrong (fallback) typeface because the font is missing.
- FOFT (Flash of Faux Text): text is drawn in faux styles (synthesized italic and bold) derived from the regular style while the dedicated styles are still missing.

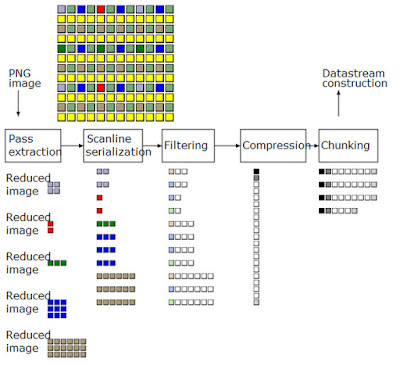

The mechanisms browsers use to order font loading are already well documented, so we go straight to the final scheme, with some practical improvements.

Practical recommendations

To avoid FOIT and minimize the duration of FOUT or FOFT, apply the following measures when loading font files:

- In every font declaration except those for icon fonts, add fallback fonts that best match the desired typeface. Besides a large set of "standard" fonts, browsers support the generic families serif, sans-serif (no serifs), monospace (fixed width), cursive (handwriting) and fantasy (decorative). Every typeface declaration in CSS (via the font and font-family properties) should end with one of them. For example:

```css
font-family: "Avenir Next Cyr", Tahoma, sans-serif;
```

- Add a descriptor to the @font-face rule that displays text immediately in the fallback font (suitable for non-icon fonts):

```css
font-display: swap;
```

- The best way to get a font file into the browser as quickly as possible is a preload link. It ensures the font files are ready by the time the page is rendered (after the styles and blocking scripts have loaded). It is supported by 68% of browsers (https://caniuse.com/#search=preload). For example:

```html
<link rel="preload" as="font" href="/assets/fonts/AvenirNextCyr-BoldItalic.woff" type="font/woff" crossorigin>
```

- To emulate preload in the remaining browsers and to cache fonts more aggressively in localStorage, you can preload fonts via XHR. A script placed at the top of the page, in the head, requests the necessary font files and caches them in localStorage. The font files must first be converted to base64 and wrapped in CSS rules, because localStorage cannot store binary data. With this approach, wrap the preload tags in noscript elements to avoid loading the font twice.

- To eliminate FOIT for icon fonts, you can use Font Face Observer to add a CSS class to html or body once the font has loaded, and apply the icon font only under that class. Then, while the font is missing, no squares are drawn in place of the icons, and the icons appear as soon as the font has loaded.

- To shorten FOUT when loading all styles of a typeface (regular, bold, italic, bold italic, etc.), you can use FOFT. First load only the regular style, under a separate font-family name (for example, LatoInitial). Once all the other styles have loaded (this can happen asynchronously), Font Face Observer adds classes that replace the faux styles with the real ones.

Whether FOFT is worth using to speed up text display is debatable (in many cases it is simpler to rely on fallback fonts), but it can help in some content-heavy projects.
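Here is a minimal sketch of the two-stage FOFT loading described above. The loader is injected: on a page it could wrap Font Face Observer (new FontFaceObserver(family, { weight, style }).load()) or document.fonts.load(). The family names follow the Lato/LatoInitial example, and the class names fonts-stage-1 and fonts-stage-2 are our own. Under the first class, the CSS would switch body text to LatoInitial, and the browser would synthesize bold and italic from it. Under the second class, the CSS would switch to the full Lato family.

```typescript
// Resolves once the given face can be used; rejects on failure or timeout.
type FaceLoader = (family: string, weight: number, style: "normal" | "italic") => Promise<void>;

// Two-stage FOFT: the regular face first, under its own family name, then all
// remaining faces in parallel. If a stage fails, the returned promise rejects
// and that stage's class is never added, so the page keeps the previous stage
// (or the fallback font).
async function loadFontsFoft(load: FaceLoader, addClass: (name: string) => void): Promise<void> {
  // Stage 1: one file; bold and italic text is temporarily faux (synthesized).
  await load("LatoInitial", 400, "normal");
  addClass("fonts-stage-1");

  // Stage 2: the real faces, requested at the same time.
  await Promise.all([
    load("Lato", 400, "normal"),
    load("Lato", 700, "normal"),
    load("Lato", 400, "italic"),
    load("Lato", 700, "italic"),
  ]);
  addClass("fonts-stage-2");
}
```

Since a failed stage only means the page keeps the fonts it already has, the caller can safely ignore the rejection with an empty catch handler.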